Yi-Coder is an open-source, high-performance code language model designed for efficient coding. It supports 52 programming languages and excels in tasks requiring long-context understanding, such as project-level code comprehension and generation. The model comes in two sizes—1.5B and 9B parameters—and is available in both base and chat versions.

In this tutorial, you’ll learn how to:

- Run the Yi-Coder model locally with an OpenAI-compatible API

- Use Yi-Coder to power Cursor

Cursor is one of the hottest AI code editors. It relies on LLMs specially trained for coding tasks, such as Yi-Coder, to provide coding assistance. You can configure Yi-Coder-9B as your own private LLM backend for Cursor.

Run the Yi-Coder model locally with an OpenAI-compatible API

Cursor requires a public HTTPS service endpoint for the local Yi-Coder-9B model. To get one, follow the instructions below.

First, install an open-source Gaia node, a collection of lightweight and portable LLM inference tools.

Gaia’s tech stack is built on top of WasmEdge, a WebAssembly-based runtime optimized for serverless computing and edge applications. This setup allows for efficient deployment of Yi-Coder in different environments, providing flexibility and scalability.

curl -sSfL 'https://github.com/GaiaNet-AI/gaianet-node/releases/latest/download/install.sh' | bash

Then, use the following command to download and initialize the model.

gaianet init --config https://raw.githubusercontent.com/GaiaNet-AI/node-configs/main/yi-coder-9b-chat/config.json

Finally, use gaianet start to start running the node.

gaianet start

The node then prints an HTTPS URL, which looks like https://NODE-ID.us.gaianet.network.

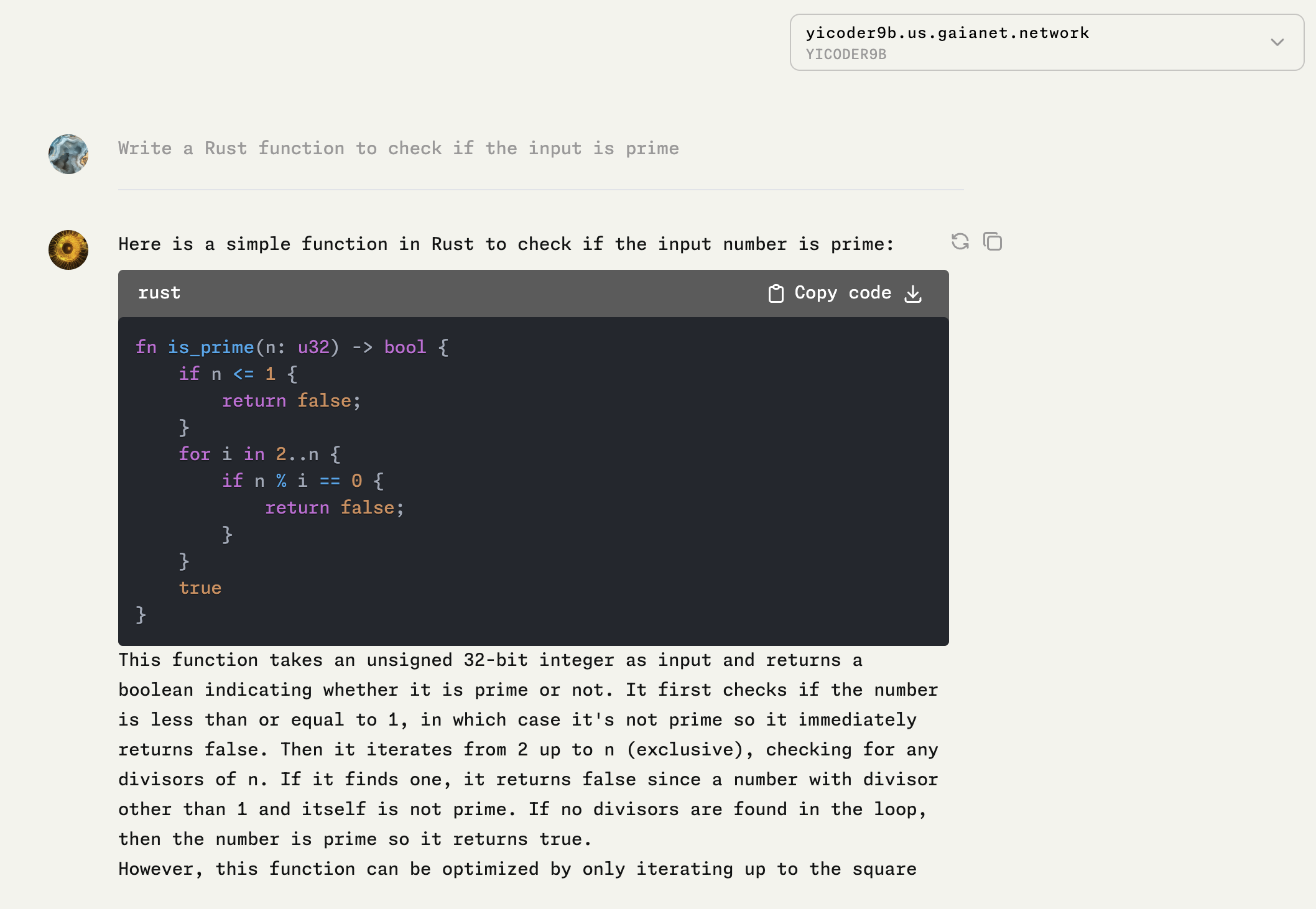

At the same time, you can open http://localhost:8080 in your browser to ask questions about programming.
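Because the node speaks an OpenAI-compatible API, you can also query it from a script. The following is a minimal sketch, not an official Gaia client. It assumes the node serves the standard OpenAI-style `/v1/chat/completions` path. `NODE_URL` is the placeholder URL from above, and `MODEL_NAME` is an assumed value that you should replace with the model name your node actually reports.

```python
import json
import urllib.request

# Placeholder: substitute the URL printed by `gaianet start`.
NODE_URL = "https://NODE-ID.us.gaianet.network"
# Assumption: replace with the model name your node actually reports.
MODEL_NAME = "yi-coder-9b-chat"


def build_chat_request(base_url, model, prompt):
    """Build (but do not send) a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url, model, prompt, timeout=120):
    """Send the request and return the text of the first reply choice."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example usage (requires a running node):
# print(ask(NODE_URL, MODEL_NAME, "Write a Python function that reverses a string."))
```

Keeping request construction separate from sending makes it easy to inspect the payload before pointing the script at a live node.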

We started the Yi-Coder-9B model with an 8k context window. If your machine has a large amount of GPU RAM (e.g., 24GB), you can increase the context size all the way to 128k. A large context size is especially useful in coding, since we might need to cram a lot of source code files into the prompt for the LLM to accomplish a complex task.
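To get a rough feel for whether a set of source files fits into a given context window, here is a back-of-the-envelope sketch. It assumes about 4 characters per token, which is a common rule of thumb and not a measurement from Yi-Coder's tokenizer. The `reserved_for_reply` budget is likewise an illustrative assumption.

```python
import math

CONTEXT_8K = 8 * 1024      # the context size used in this tutorial
CONTEXT_128K = 128 * 1024  # the larger size mentioned above


def estimate_tokens(text, chars_per_token=4.0):
    """Rough token estimate; the chars-per-token ratio is an assumed heuristic."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(sources, context_tokens, reserved_for_reply=1024):
    """Return (fits, estimated_prompt_tokens) for a mapping of file name to source text."""
    total = sum(estimate_tokens(text) for text in sources.values())
    return total + reserved_for_reply <= context_tokens, total


# Example: a single 40,000-character file is estimated at 10,000 tokens,
# which is too large for an 8k window but fits easily into 128k.
example_sources = {"big_module.py": "x" * 40_000}
fits_8k, prompt_tokens = fits_in_context(example_sources, CONTEXT_8K)
fits_128k, _ = fits_in_context(example_sources, CONTEXT_128K)
```

For real planning, count tokens with the model's own tokenizer instead of this heuristic.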

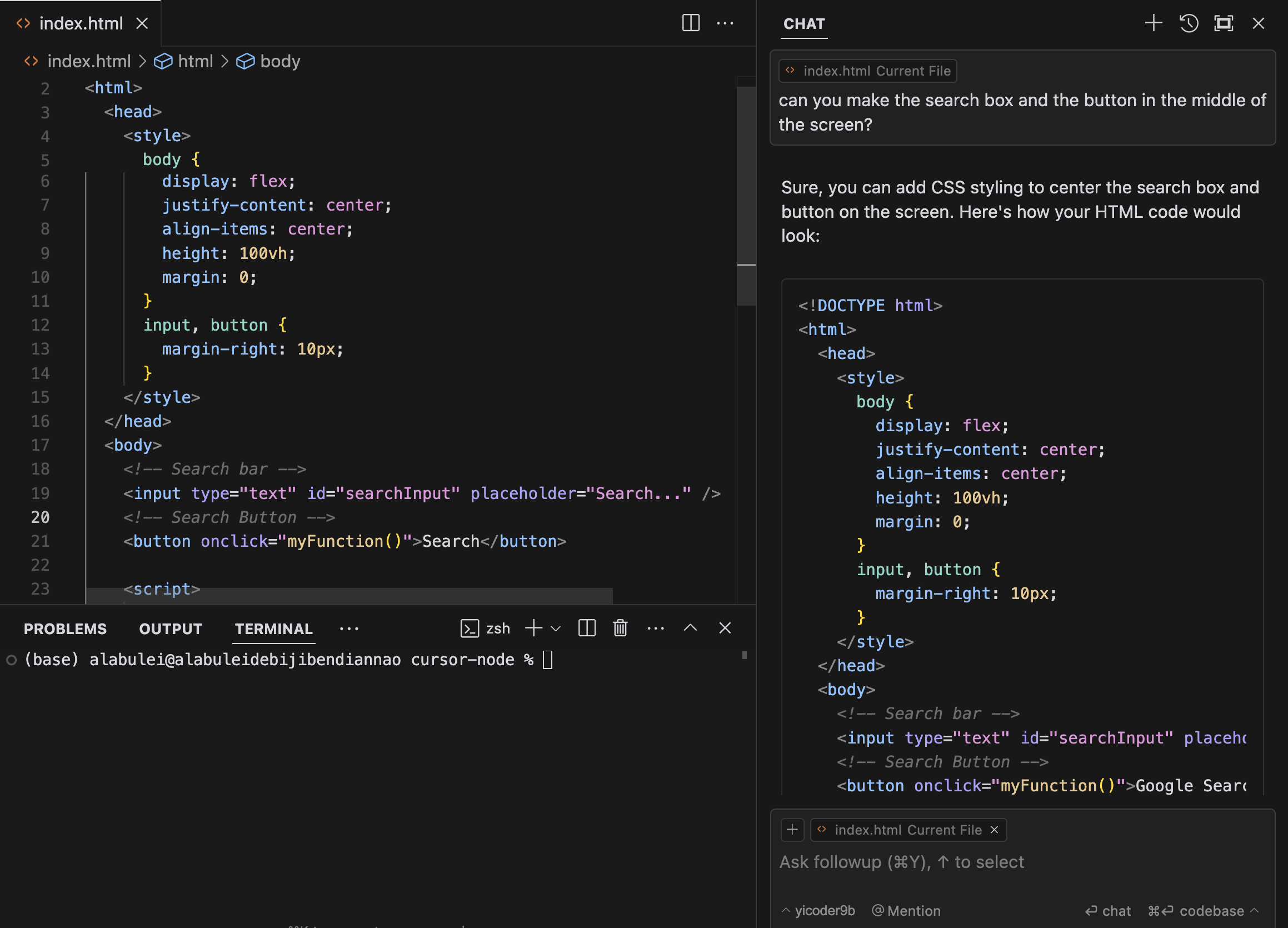

Integrate Yi-Coder-9B with Cursor

Next, let’s configure Cursor to use the Yi-Coder-9B model running on our own machine.

Simply use your Gaia node URL to override Cursor’s default OpenAI URL, set the model name and the “API key”, and you’re in business! See detailed instructions here.
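Before entering values in Cursor, it can help to confirm the base URL and the model name the node reports. The sketch below assumes the node exposes the OpenAI-style `/v1/models` listing and that the base URL is the node URL with `/v1` appended. Both are assumptions based on OpenAI API conventions, so check them against your node. The helper names are hypothetical.

```python
import json
import urllib.request


def openai_base_url(node_url):
    """Return the base URL to give to an OpenAI-compatible client (assumed to end in /v1)."""
    return node_url.rstrip("/") + "/v1"


def extract_model_ids(models_response):
    """Pull model ids out of an OpenAI-style model list response."""
    return [entry["id"] for entry in models_response.get("data", [])]


def list_models(node_url, timeout=30):
    """Fetch the model list from a running node and return the model ids."""
    url = openai_base_url(node_url) + "/models"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return extract_model_ids(json.load(response))


# Example usage (requires a running node):
# print(openai_base_url("https://NODE-ID.us.gaianet.network"))
# print(list_models("https://NODE-ID.us.gaianet.network"))
```

The model id returned by the node is the value to enter as the model name in Cursor.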

Now, let’s test Yi-Coder-9B by asking it to write a simple search page.

I prompted the model to generate a simple search page.

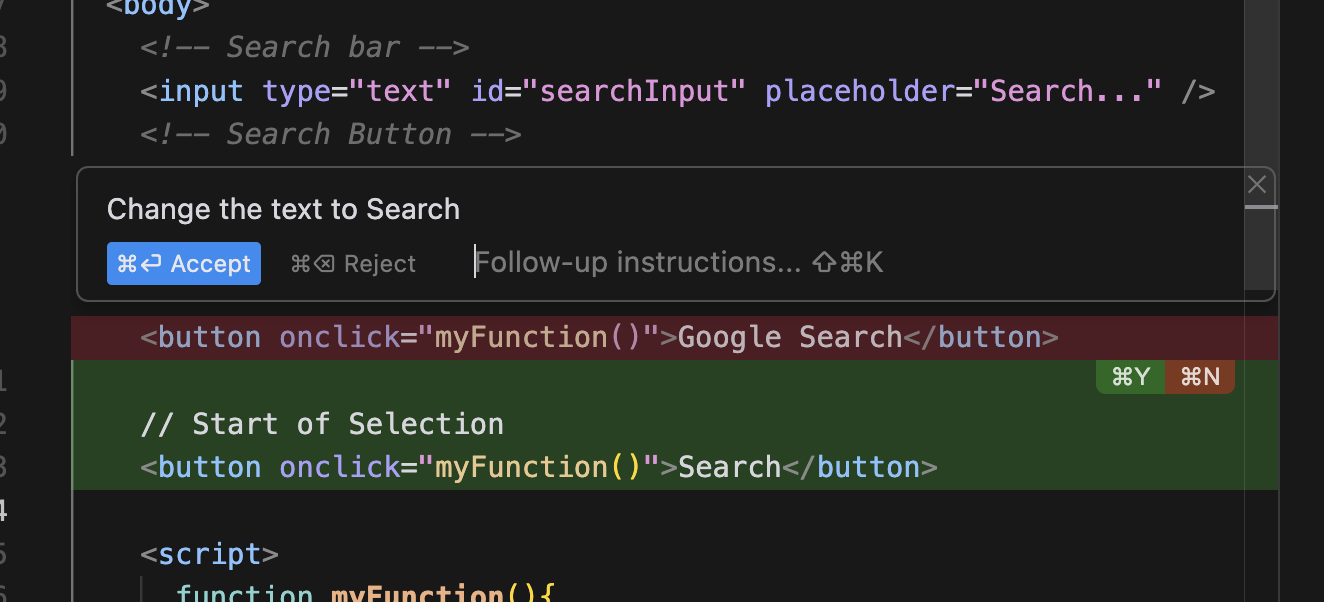

Next, I asked Yi-Coder-9B to change the text label on the button.

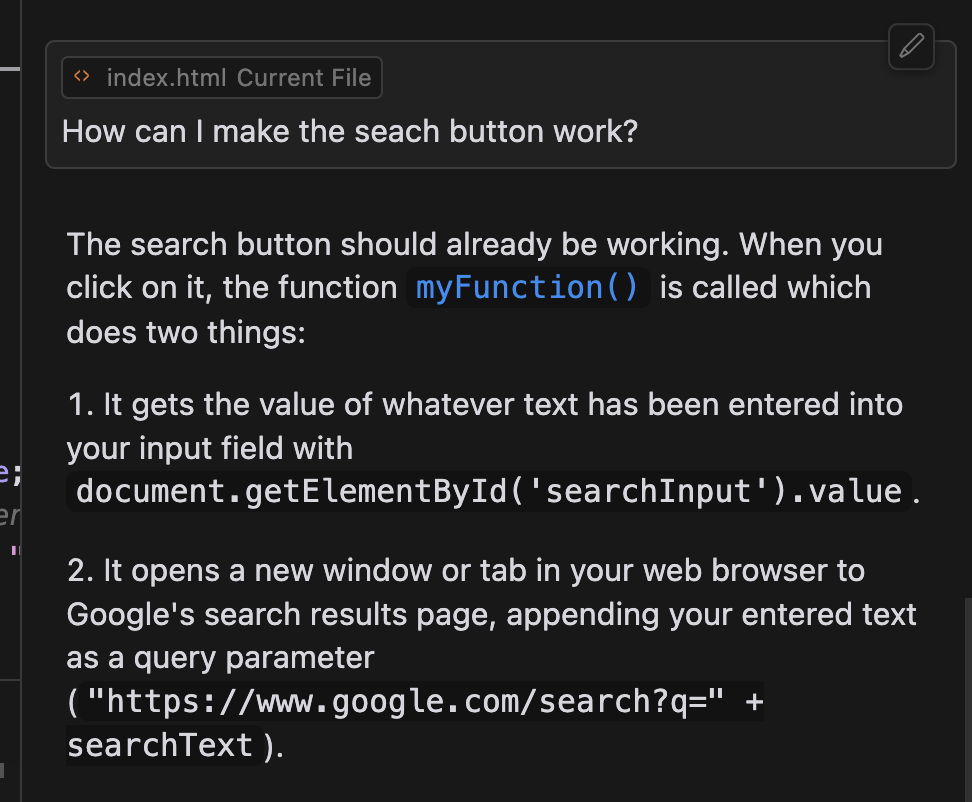

Yi-Coder-9B then explains how the search button works.

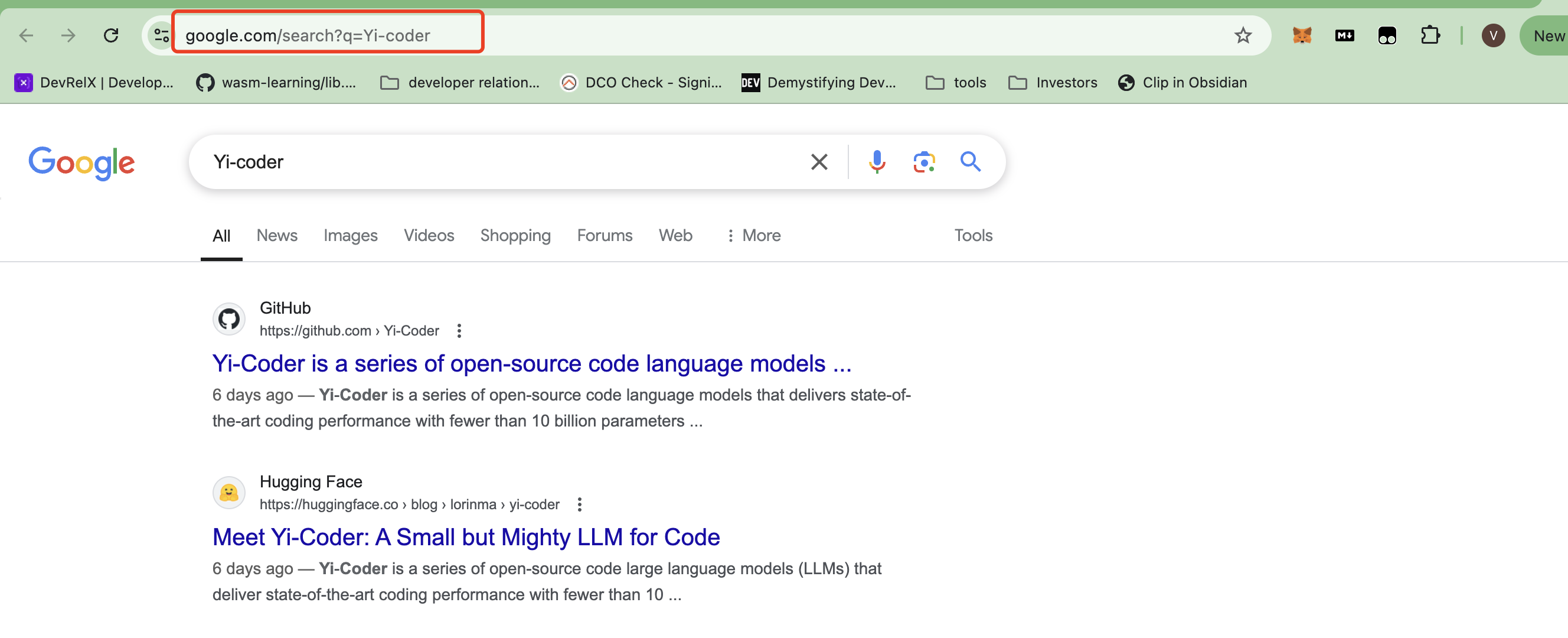

The web page works as advertised!

That’s it! Learn more from the LlamaEdge docs, and join the WasmEdge Discord to ask questions and share insights.