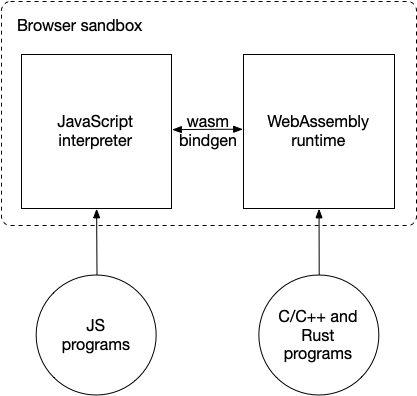

WebAssembly started as a “JavaScript alternative for browsers”. The idea is to run high-performance applications compiled from languages like C/C++ or Rust safely in browsers. In the browser, WebAssembly runs side by side with JavaScript.

Figure 1. WebAssembly and JavaScript in the browser.

WebAssembly is increasingly used in the cloud, where it is becoming a universal runtime for cloud-native applications. Compared with Docker-like application containers, WebAssembly runtimes achieve higher performance with lower resource consumption. Common use cases for WebAssembly in the cloud include the following.

- Runtime for serverless function-as-a-service (FaaS)

- Embedding user-defined functions into SaaS apps or databases

- Runtime for sidecar applications in a service mesh

- Programmable plug-ins for web proxies

- Sandbox runtimes for edge devices including software-defined vehicles and smart factories

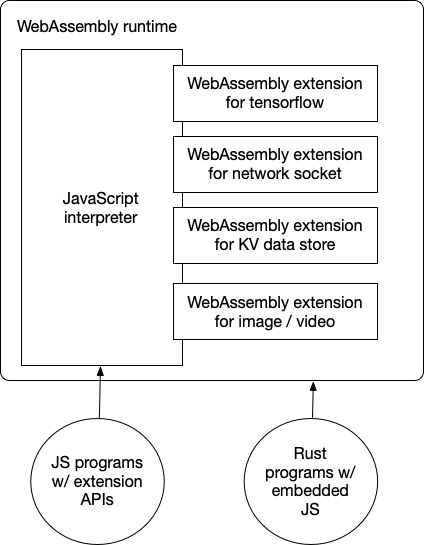

However, in those cloud-native use cases, developers often want to use JavaScript to write business applications. That means we must now support JavaScript in WebAssembly. Furthermore, we should support calling C/C++ or Rust functions from JavaScript in a WebAssembly runtime to take advantage of WebAssembly’s computational efficiency. The WasmEdge WebAssembly runtime allows you to do exactly that.

Figure 2. WebAssembly and JavaScript in the cloud.

WasmEdge

WasmEdge is a leading cloud-native WebAssembly runtime hosted by the CNCF (Cloud Native Computing Foundation) / Linux Foundation. It is the fastest WebAssembly runtime on the market today. WasmEdge supports all standard WebAssembly extensions as well as proprietary extensions for Tensorflow inference, KV store, image processing, and more. Its compiler toolchain supports not only WebAssembly languages such as C/C++, Rust, Swift, Kotlin, and AssemblyScript but also regular JavaScript.

A WasmEdge application can be embedded into a C program, a Go program, a Rust program, a JavaScript program, or the operating system’s CLI. The runtime can be managed by Docker tools (e.g., CRI-O), orchestration tools (e.g., K8s), serverless platforms (e.g., Vercel, Netlify, AWS Lambda, Tencent SCF), and data streaming frameworks (e.g., YoMo and Zenoh).

Now, you can run JavaScript programs in WasmEdge-powered serverless functions, microservices, and AIoT applications! WasmEdge not only runs plain JavaScript programs but also allows developers to use Rust and C/C++ to create new JavaScript APIs within the safety sandbox of WebAssembly.

Building a JavaScript engine in WasmEdge

First, let’s build a WebAssembly-based JavaScript interpreter program for WasmEdge. It is based on QuickJS with WasmEdge extensions, such as network sockets and Tensorflow inference, incorporated into the interpreter as JavaScript APIs. You will need to install Rust to build the interpreter.

If you just want to use the interpreter to run JavaScript programs, you can skip this section. Otherwise, make sure you have installed Rust and WasmEdge before you continue.

Fork or clone the wasmedge-quickjs GitHub repository to get started.

$ git clone https://github.com/second-state/wasmedge-quickjs

Following the instructions from that repo, you will be able to build a JavaScript interpreter for WasmEdge.

# Install GCC

$ sudo apt update

$ sudo apt install build-essential

# Install wasm32-wasi target for Rust

$ rustup target add wasm32-wasi

# Build the QuickJS JavaScript interpreter

$ cargo build --target wasm32-wasi --release

The WebAssembly-based JavaScript interpreter program is located in the build target directory. You can now try a simple “hello world” JavaScript program (example_js/hello.js), which prints out the command line arguments to the console.

args = args.slice(1)

print("Hello", ...args)

Run the hello.js file in WasmEdge’s QuickJS runtime as follows. Note that the --dir .:. option on the command line gives wasmedge permission to read the local directory in the file system, which is where the hello.js file is located.

$ cd example_js

$ wasmedge --dir .:. ../target/wasm32-wasi/release/wasmedge_quickjs.wasm hello.js WasmEdge Runtime

Hello WasmEdge Runtime
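
The way hello.js turns its command line into the greeting can be sketched in plain JavaScript. This is only an illustration: it assumes, based on the output above, that the first element of args is the script name and that print() separates its values with spaces. The greeting function is a hypothetical helper, not part of the repo.

```javascript
// Hypothetical helper: `args` is passed in explicitly so the sketch does not
// depend on the interpreter's global. Index 0 is assumed to be the script name.
function greeting(args) {
  const userArgs = args.slice(1);           // drop "hello.js"
  return ['Hello', ...userArgs].join(' ');  // mimic print()'s space-separated output
}

const line = greeting(['hello.js', 'WasmEdge', 'Runtime']);
console.log(line);
```

Passing the arguments in as a parameter keeps the logic testable outside WasmEdge, since the global args only exists inside the interpreter.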

ES6 module support

The WasmEdge QuickJS runtime supports ES6 modules. The example_js/es6_module_demo folder in the GitHub repo contains an example. The module_def.js file defines and exports a simple JS function.

function hello(){

console.log('hello from module_def.js')

}

export {hello}

The module_def_async.js file defines and exports an async function and a variable.

export async function hello(){

console.log('hello from module_def_async.js')

return "module_def_async.js : return value"

}

export var something = "async thing"

The demo.js file imports functions and variables from those modules and executes them.

import { hello as module_def_hello } from './module_def.js'

module_def_hello()

var f = async ()=>{

let {hello , something} = await import('./module_def_async.js')

await hello()

console.log("./module_def_async.js `something` is ",something)

}

f()

To run the example, you can do the following on the CLI.

$ cd example_js/es6_module_demo

$ wasmedge --dir .:. ../../target/wasm32-wasi/release/wasmedge_quickjs.wasm demo.js

hello from module_def.js

hello from module_def_async.js

./module_def_async.js `something` is async thing

CommonJS support

The WasmEdge QuickJS runtime supports CommonJS (CJS) modules. The example_js/simple_common_js_demo folder in the GitHub repo contains several examples.

The other_module/main.js file defines and exports a simple CJS module.

print('hello other_module')

module.exports = ['other module exports']

The one_module/main.js file uses the CJS module.

print('hello one_module');

print('dirname:',__dirname);

let other_module_exports = require('../other_module/main.js')

print('other_module_exports=',other_module_exports)

Then the file_module.js file imports the module and runs it.

import * as one from './one_module/main.js'

print('hello file_module')

To run the example, you need to build a WasmEdge QuickJS runtime with CJS support.

$ cargo build --target wasm32-wasi --release --features=cjs

Finally, do the following on the CLI.

$ cd example_js/simple_common_js_demo

$ wasmedge --dir .:. ../../target/wasm32-wasi/release/wasmedge_quickjs.wasm file_module.js

hello one_module

dirname: one_module

hello other_module

other_module_exports= other module exports

hello file_module
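
The output order above follows from the CommonJS contract: a module body runs the first time it is required, and whatever it assigns to module.exports is handed back to the caller. The sketch below illustrates that contract with a tiny in-memory loader. It is not how the WasmEdge QuickJS runtime implements require; the sources table, miniRequire, and log are hypothetical names, and path resolution is simplified to exact keys instead of relative paths.

```javascript
// Hypothetical in-memory sources standing in for the demo files.
const sources = {
  'other_module/main.js':
    "log('hello other_module'); module.exports = ['other module exports'];",
  'one_module/main.js':
    "log('hello one_module');" +
    "const other = require('other_module/main.js');" +
    "module.exports = { other: other };",
};

const logged = [];  // collects messages so the load order is visible
const cache = {};   // each module body runs once; later requires reuse the result

function miniRequire(path) {
  if (cache[path]) return cache[path].exports;
  if (!(path in sources)) throw new Error('module not found: ' + path);

  const module = { exports: {} };
  cache[path] = module;  // register before running the body

  const dirname = path.split('/').slice(0, -1).join('/');
  // Wrap the source in a function that receives the CommonJS "globals".
  const body = new Function(
    'module', 'exports', 'require', '__dirname', 'log', sources[path]);
  body(module, module.exports, miniRequire, dirname, (msg) => logged.push(msg));
  return module.exports;
}

const one = miniRequire('one_module/main.js');
console.log(logged.join('\n'));
console.log(JSON.stringify(one));
```

As in the demo output, the requiring module starts first and the required module runs at the point of the require call.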

NodeJS module support

With CommonJS support, we can run NodeJS modules in WasmEdge too. The simple_common_js_demo/npm_main.js demo shows how it works. It utilizes the third-party md5 and mathjs modules.

import * as std from 'std'

var md5 = require('md5');

console.log(__dirname);

console.log('md5(message)=',md5('message'));

const { sqrt } = require('mathjs')

console.log('sqrt(-4)=',sqrt(-4).toString())

print('write file')

let f = std.open('hello.txt','w')

let x = f.puts("hello wasm")

f.flush()

f.close()

To run it, we must first use the Vercel ncc tool to bundle all dependencies into a single file. The build script is defined in package.json.

{

"dependencies": {

"mathjs": "^9.5.1",

"md5": "^2.3.0"

},

"devDependencies": {

"@vercel/ncc": "^0.28.6"

},

"scripts": {

"ncc_build": "ncc build npm_main.js"

}

}

Now, install ncc and npm_main.js dependencies via NPM, and then build the single JS file in dist/index.js.

$ npm install

$ npm run ncc_build

ncc: Version 0.28.6

ncc: Compiling file index.js

Run the JS file with NodeJS imports in WasmEdge CLI as follows.

$ wasmedge --dir .:. ../../target/wasm32-wasi/release/wasmedge_quickjs.wasm dist/index.js

dist

md5(message)= 78e731027d8fd50ed642340b7c9a63b3

sqrt(-4)= 2i

write file

Next, let’s try a few more advanced JavaScript programs.

A JavaScript networking client example

The interpreter supports the WasmEdge networking socket extension so that your JavaScript can make HTTP connections to the Internet. Here is an example JavaScript program (http_demo.js).

let r = GET("http://18.235.124.214/get?a=123",{"a":"b","c":[1,2,3]})

print(r.status)

let headers = r.headers

print(JSON.stringify(headers))

let body = r.body;

let body_str = new Uint8Array(body)

print(String.fromCharCode.apply(null,body_str))

To run the JavaScript in the WasmEdge runtime, you can do this on the CLI.

$ cd example_js

$ wasmedge --dir .:. ../target/wasm32-wasi/release/wasmedge_quickjs.wasm http_demo.js

You should now see the HTTP GET result printed on the console.
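
The last two lines of the example convert the response body, which arrives as raw bytes, into a string. A minimal sketch of that step in isolation follows, with two assumptions stated up front: the body is an ArrayBuffer of single-byte characters (ASCII or Latin-1), and the sample bytes are made up for illustration. The bytesToString helper is hypothetical. It decodes in chunks because calling apply with one very large array can exceed an engine's argument limit.

```javascript
// Hypothetical helper: decode an ArrayBuffer of single-byte characters.
// Multi-byte UTF-8 text would need a real decoder instead.
function bytesToString(buffer, chunkSize = 8192) {
  const bytes = new Uint8Array(buffer);
  let out = '';
  for (let i = 0; i < bytes.length; i += chunkSize) {
    // subarray() is a view on the same memory, so no bytes are copied here.
    out += String.fromCharCode.apply(null, bytes.subarray(i, i + chunkSize));
  }
  return out;
}

// Made-up stand-in for r.body: the bytes of {"a":1}
const sample = new Uint8Array([123, 34, 97, 34, 58, 49, 125]).buffer;
console.log(bytesToString(sample));
```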

A JavaScript networking server example

Below is an example of JavaScript running an HTTP server listening at port 8000.

import {HttpServer} from 'http'

let http_server = new HttpServer('0.0.0.0:8000')

print('listen on 0.0.0.0:8000')

while(true){

http_server.accept((request)=>{

let body = request.body

let body_str = String.fromCharCode.apply(null,new Uint8Array(body))

print(JSON.stringify(request),'\n body_str:',body_str)

return {

status:200,

header:{'Content-Type':'application/json'},

body:'echo:'+body_str

}

});

}

To run the JavaScript in the WasmEdge runtime, you can do this on the CLI. Since it is a server, you should run it in the background.

$ cd example_js

$ nohup wasmedge --dir .:. ../target/wasm32-wasi/release/wasmedge_quickjs.wasm http_server_demo.js &

Then you can test the server by querying it over the network.

$ curl -d "WasmEdge" -X POST http://localhost:8000

echo:WasmEdge

You should now see the HTTP POST body printed on the console.
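
The callback passed to accept() is an ordinary function from a request object to a response object, so it can be pulled out and exercised without a network. The sketch below assumes the request and response shapes used in the example above (a body of raw bytes in, and status, header, and body out). The names echoHandler and fakeRequest are hypothetical.

```javascript
// Hypothetical standalone version of the callback from the server example.
function echoHandler(request) {
  // Decode the raw request bytes (assumed single-byte characters).
  const bodyStr = String.fromCharCode.apply(null, new Uint8Array(request.body));
  return {
    status: 200,
    header: { 'Content-Type': 'application/json' },
    body: 'echo:' + bodyStr,
  };
}

// Made-up request whose body holds the bytes of "WasmEdge".
const fakeRequest = {
  body: new Uint8Array([87, 97, 115, 109, 69, 100, 103, 101]).buffer,
};
const response = echoHandler(fakeRequest);
console.log(response.status, response.body);
```

Keeping the handler separate from the accept loop makes it possible to check the response logic before starting the server.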

A JavaScript Tensorflow inference example

The interpreter supports the WasmEdge Tensorflow lite inference extension so that your JavaScript can run an ImageNet model for image classification. Here is an example of JavaScript.

import {TensorflowLiteSession} from 'tensorflow_lite'

import {Image} from 'image'

let img = new Image('./example_js/tensorflow_lite_demo/food.jpg')

let img_rgb = img.to_rgb().resize(192,192)

let rgb_pix = img_rgb.pixels()

let session = new TensorflowLiteSession('./example_js/tensorflow_lite_demo/lite-model_aiy_vision_classifier_food_V1_1.tflite')

session.add_input('input',rgb_pix)

session.run()

let output = session.get_output('MobilenetV1/Predictions/Softmax');

let output_view = new Uint8Array(output)

let max = 0;

let max_idx = 0;

for (var i in output_view){

let v = output_view[i]

if(v>max){

max = v;

max_idx = i;

}

}

print(max,max_idx)

To run the JavaScript in the WasmEdge runtime, first re-build the QuickJS engine with Tensorflow support, and then run the JavaScript program that uses the Tensorflow API.

$ cargo build --target wasm32-wasi --release --features=tensorflow

... ...

$ cd example_js/tensorflow_lite_demo

$ wasmedge-tensorflow-lite --dir .:. ../../target/wasm32-wasi/release/wasmedge_quickjs.wasm main.js

label:

Hot dog

confidence:

0.8941176470588236

Note:

- The --features=tensorflow compiler flag builds a version of the QuickJS engine with WasmEdge Tensorflow extensions.

- The wasmedge-tensorflow-lite program is part of the WasmEdge package. It is the WasmEdge runtime with the Tensorflow extension built in.

You should now see the name of the food item recognized by the TensorFlow lite ImageNet model.
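
The post-processing step in the script is an argmax over the model's output scores. A minimal sketch of that step alone follows. It assumes the output is a quantized Uint8Array in which 255 means full confidence, which is consistent with the confidence value printed above but is not confirmed by the source. The labels and scores arrays are made-up stand-ins for the model's label file and output tensor, and argmax is a hypothetical helper.

```javascript
// Hypothetical helper: index and value of the largest score.
// Uses a numeric loop so the index stays a number (for...in yields string keys).
function argmax(scores) {
  let maxIdx = 0;
  for (let i = 1; i < scores.length; i++) {
    if (scores[i] > scores[maxIdx]) maxIdx = i;
  }
  return { index: maxIdx, value: scores[maxIdx] };
}

// Made-up data for illustration only.
const labels = ['Pizza', 'Hot dog', 'Salad'];
const scores = new Uint8Array([12, 228, 15]);

const best = argmax(scores);
const confidence = best.value / 255;  // assumed uint8 quantization
console.log('label:', labels[best.index]);
console.log('confidence:', confidence);
```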

Make it faster

The above Tensorflow inference example takes 1–2 seconds to run. That is acceptable in web application scenarios but could be improved. Recall that WasmEdge is the fastest WebAssembly runtime today due to its AOT (ahead-of-time) compiler optimization. WasmEdge provides a wasmedgec utility to compile the wasm file to a native so shared library. You can use wasmedge to run the so file instead of the wasm file to get much faster performance.

The following example uses the extended versions of wasmedge and wasmedgec that support the WasmEdge Tensorflow extension.

$ cd example_js/tensorflow_lite_demo

$ wasmedgec-tensorflow ../../target/wasm32-wasi/release/wasmedge_quickjs.wasm wasmedge_quickjs.so

$ wasmedge-tensorflow-lite --dir .:. wasmedge_quickjs.so main.js

label:

Hot dog

confidence:

0.8941176470588236

You can see that the image classification task can be completed within 0.1s. That is at least a 10x improvement!

The so shared library is not portable across machines and OSes. You should run wasmedgec and wasmedgec-tensorflow on the machine where you deploy and run the application.
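
To compare the interpreted and AOT-compiled runs on your own machine, one rough option is to time the work from inside the script. The sketch below uses only Date.now(), which is standard JavaScript. The timeIt helper and the busyWork workload are hypothetical stand-ins for the inference code. Note that this measures only the code inside the callback, not interpreter startup.

```javascript
// Hypothetical helper: run fn once and report wall-clock time in milliseconds.
function timeIt(label, fn) {
  const start = Date.now();
  const result = fn();
  const elapsedMs = Date.now() - start;
  console.log(label + ': ' + elapsedMs + ' ms');
  return { result, elapsedMs };
}

// Made-up CPU-bound workload standing in for the real inference call.
function busyWork() {
  let sum = 0;
  for (let i = 0; i < 1000000; i++) sum += i % 7;
  return sum;
}

const timed = timeIt('busyWork', busyWork);
```

Running the same script once with the wasm file and once with the so file gives two numbers to compare.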

A note on QuickJS

Now, the choice of QuickJS as our JavaScript engine might raise the question of performance. Isn’t QuickJS a lot slower than v8 due to a lack of JIT support? Yes, but …

First of all, QuickJS is a lot smaller than v8. In fact, it only takes 1/40 (or 2.5%) of the runtime resources v8 consumes. You can run a lot more QuickJS functions than v8 functions on a single physical machine.

Second, for most business logic applications, raw performance is not critical. When an application does have computationally intensive tasks, such as AI inference on the fly, WasmEdge allows the QuickJS application to drop down to high-performance WebAssembly for those tasks, whereas adding such extension modules to v8 is not so easy.

Third, it is known that many JavaScript security issues arise from JIT. Maybe turning off JIT in the cloud-native environment is not such a bad idea!

What’s next?

The examples demonstrate how to use the wasmedge_quickjs.wasm JavaScript engine in WasmEdge. Besides using the CLI, you could use Docker / Kubernetes tools to start the WebAssembly application, or embed the application into your own applications or frameworks as we discussed earlier in this article.

In the next several articles, I will focus on using JavaScript together with Rust to make the most out of both languages.

- Incorporating JavaScript into a Rust app

- Creating high-performance JavaScript APIs using Rust

- Calling native functions from JavaScript

JavaScript in cloud-native WebAssembly is still an emerging area in the next generation of cloud and edge computing infrastructure. We are just getting started! If you are interested, join us in the WasmEdge project (or tell us what you want by raising feature request issues).