Today, Mistral AI released mathstral, a 7B model finetuned specifically for math reasoning and scientific discovery. It has a 32k context window, and its weights are available under the Apache 2.0 license.

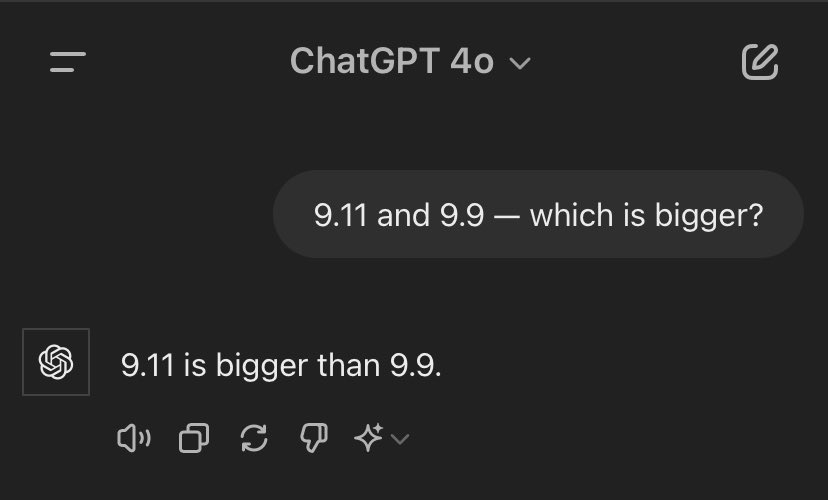

As we have seen, leading-edge LLMs such as GPT-4o can solve very complex math problems. But do they have common sense? A meme going around the Internet suggests that LLMs only pretend to solve “math Olympiad level” problems, since they lack an understanding of even elementary school math. The case in point: most LLMs, including GPT-4o, cannot tell whether 9.11 or 9.8 is bigger!

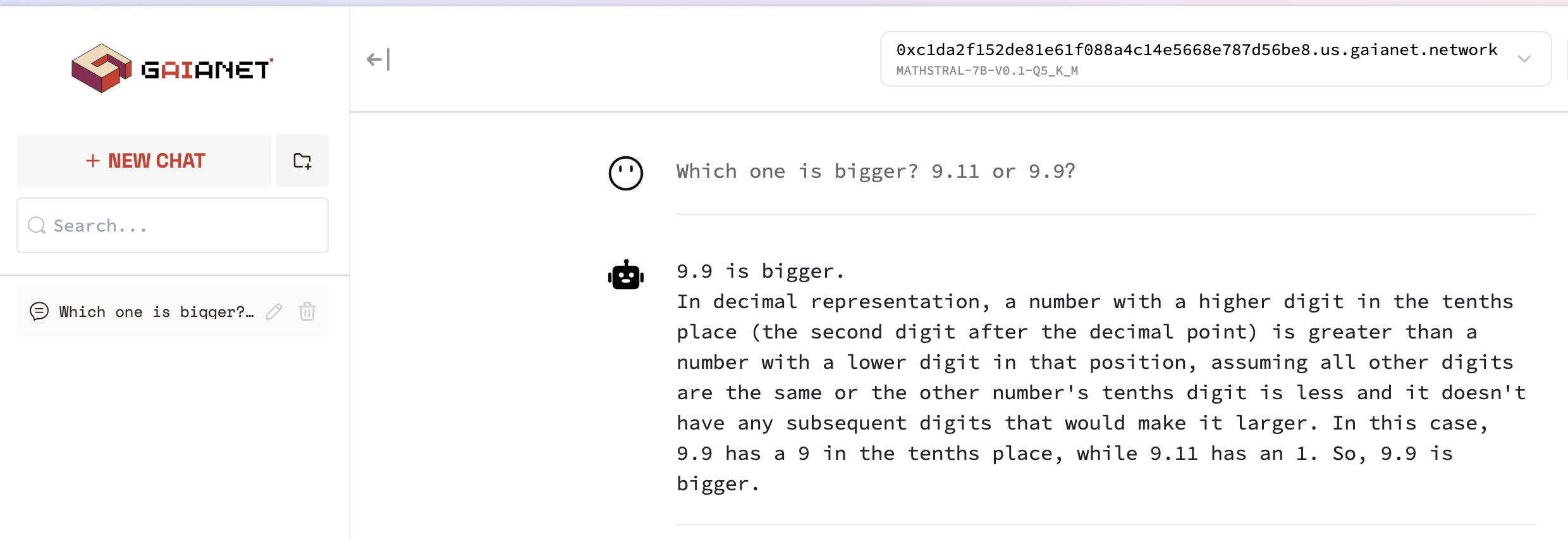

Can mathstral answer this common sense question? Let’s download and run the mathstral model locally and test its ability.

We will use LlamaEdge (the Rust + Wasm stack) to develop and deploy applications for this model. There are no complex Python packages or C++ toolchains to install! See why we chose this tech stack.

Run mathstral locally and start an OpenAI-compatible API service

Step 1: Install WasmEdge via the following command line.

curl -sSf https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install_v2.sh | bash -s -- -v 0.13.5 --ggmlbn=b3259

Step 2: Download the mathstral GGUF file using the command below. The model file is 5.14 GB, so the download could take a while.

curl -LO https://huggingface.co/second-state/mathstral-7B-v0.1-GGUF/resolve/main/mathstral-7B-v0.1-Q5_K_M.gguf

Step 3: Download an API server app. It is also a cross-platform portable Wasm app that can run on many CPU and GPU devices.

curl -LO https://github.com/LlamaEdge/LlamaEdge/releases/latest/download/llama-api-server.wasm

Step 4: Use the following command to start an API server for the model.

wasmedge --dir .:. --nn-preload default:GGML:AUTO:mathstral-7B-v0.1-Q5_K_M.gguf \
llama-api-server.wasm \
--prompt-template mistral-instruct \
--ctx-size 32000 \
--batch-size 128 \
--model-name mathstral-7B-v0.1
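Loading a 5 GB model takes a while, so a script that talks to the server may want to wait until it is ready. Below is a minimal sketch of such a check. `wait_for_server` is a hypothetical helper (not part of LlamaEdge), and it assumes the server answers `GET /v1/models` on the default port 8080 used in this post.

```shell
# Hypothetical helper, not part of LlamaEdge.
# Polls GET /v1/models once per second until the server responds successfully.
# Assumption: the API server exposes /v1/models on the given base URL.
wait_for_server() {
  local base_url="${1:-http://localhost:8080}" tries="${2:-60}" i=1
  while [ "$i" -le "$tries" ]; do
    # -s hides progress output, -f makes curl fail on HTTP errors,
    # --max-time stops a single attempt from hanging.
    if curl -sf --max-time 5 "$base_url/v1/models" > /dev/null; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  # The server never answered within the allotted attempts.
  return 1
}
```

For example, running `wait_for_server && echo "mathstral is ready"` in the second terminal blocks until the model has loaded, or gives up after about a minute.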

From another terminal, you can interact with the API server using curl.

curl -X POST http://localhost:8080/v1/chat/completions \
-H 'accept:application/json' \
-H 'Content-Type: application/json' \
-d '{"messages":[{"role":"system", "content": "You are a sentient, superintelligent math teacher, here to teach and assist me."}, {"role":"user", "content": "Which one is bigger - 9.11 or 9.9?"}], "model":"mathstral-7B-v0.1"}'
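To ask other questions without hand-editing the JSON, the request can be wrapped in a few shell functions. This is a sketch under stated assumptions: `json_escape`, `build_payload`, and `ask_mathstral` are hypothetical helper names, and the URL and model name default to the values used above.

```shell
# Hypothetical helpers, not part of LlamaEdge.
# Assumed defaults: the local API server from Step 4 and its --model-name.
API_URL="${API_URL:-http://localhost:8080/v1/chat/completions}"
MODEL_NAME="${MODEL_NAME:-mathstral-7B-v0.1}"
SYSTEM_PROMPT="You are a sentient, superintelligent math teacher, here to teach and assist me."

# Escape backslashes and double quotes so the text can sit inside a JSON string.
# Newlines and other control characters are not handled in this sketch.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Build the chat completion request body for a single user question.
build_payload() {
  printf '{"messages":[{"role":"system","content":"%s"},{"role":"user","content":"%s"}],"model":"%s"}' \
    "$(json_escape "$SYSTEM_PROMPT")" "$(json_escape "$1")" "$(json_escape "$MODEL_NAME")"
}

# POST the question to the API server and print the raw JSON response.
ask_mathstral() {
  curl -s -X POST "$API_URL" \
    -H 'accept: application/json' \
    -H 'Content-Type: application/json' \
    -d "$(build_payload "$1")"
}
```

For example, `ask_mathstral 'Which one is bigger - 9.11 or 9.8?' | jq -r '.choices[0].message.content'` prints only the model's reply, assuming jq is installed.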

The server responds with the following JSON.

{"id":"chatcmpl-9d0c3166-3fc8-4744-8b5c-08d900c39d24","object":"chat.completion","created":1721228452,"model":"mathstral-7B-v0.1-Q5_K_M","choices":[{"index":0,"message":{"content":"1. Identify the given numbers: 9.11 and 9.9\n\n2. Compare the integer parts of both numbers. In this case, the integer part is the number before the decimal point.\n\n - For 9.11, the integer part is 9.\n - For 9.9, the integer part is also 9.\n\n3. Since the integer parts are equal, we need to compare the decimal parts (the numbers after the decimal point).\n\n4. Compare the first digit after the decimal point of both numbers.\n\n - For 9.11, the first digit after the decimal point is 1.\n - For 9.9, the first digit after the decimal point is also 1.\n\n5. The second digit after the decimal point for 9.11 is 1, while for 9.9 it does not exist, because the number only has one digit after the decimal point.\n\n6. Since 1 (from 9.11) is greater than 0 (implicitly, as there's no second digit in 9.9), we can conclude that:\n\n$$9.11 > 9.9$$\n\n7. Therefore, the final answer is:\n\n$$\\boxed{9.11}$$","tool_calls":[],"role":"assistant"},"finish_reason":"stop","logprobs":null}],"usage":{"prompt_tokens":26,"completion_tokens":293,"total_tokens":319}}
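For reference, the comparison can be checked by hand: write both numbers with the same number of decimal places, so 9.9 becomes 9.90, and compare the fractional parts as hundredths.

```latex
\begin{aligned}
9.9  &= 9 + \tfrac{9}{10} = 9 + \tfrac{90}{100} \\
9.11 &= 9 + \tfrac{1}{10} + \tfrac{1}{100} = 9 + \tfrac{11}{100} \\
9.9 - 9.11 &= \tfrac{90 - 11}{100} = \tfrac{79}{100} = 0.79 > 0
\quad\Longrightarrow\quad 9.9 > 9.11
\end{aligned}
```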

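In this run, however, the 7B mathstral model gets the question wrong. In step 4 of its answer it states that the first digit after the decimal point of 9.9 is 1 (it is 9), and it goes on to conclude that 9.11 > 9.9. In fact 9.9 = 9.90, and since 90 hundredths is more than 11 hundredths, 9.9 is the bigger number. We have observed over and over that finetuned open source models can outperform much larger closed source models in specialized areas, but this particular answer is not an example of that trend.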

Offer mathstral as a service

The above steps show how to run the mathstral model locally. But I also want to share it with my friends, perhaps even with my own math knowledge as the context. The GaiaNet project allows you to do exactly that.

First, we will need to install the required software.

curl -sSfL 'https://github.com/GaiaNet-AI/gaianet-node/releases/latest/download/install.sh' | bash

Then, we can use the following command line to download the model.

gaianet init --config https://raw.githubusercontent.com/GaiaNet-AI/node-configs/main/mathstral-7b/config.json

Next, run gaianet start to start the mathstral model. It is served over an OpenAI-compatible API endpoint, as well as a web-based chatbot UI.

gaianet start

Then, open the URL printed in the terminal in your browser to chat with the mathstral-7b model.

GaiaNet does much more than run and serve a local LLM. It lets you customize the prompts, add context, and even incorporate a proprietary knowledge base to ground the LLM's answers in your own facts. Check it out!