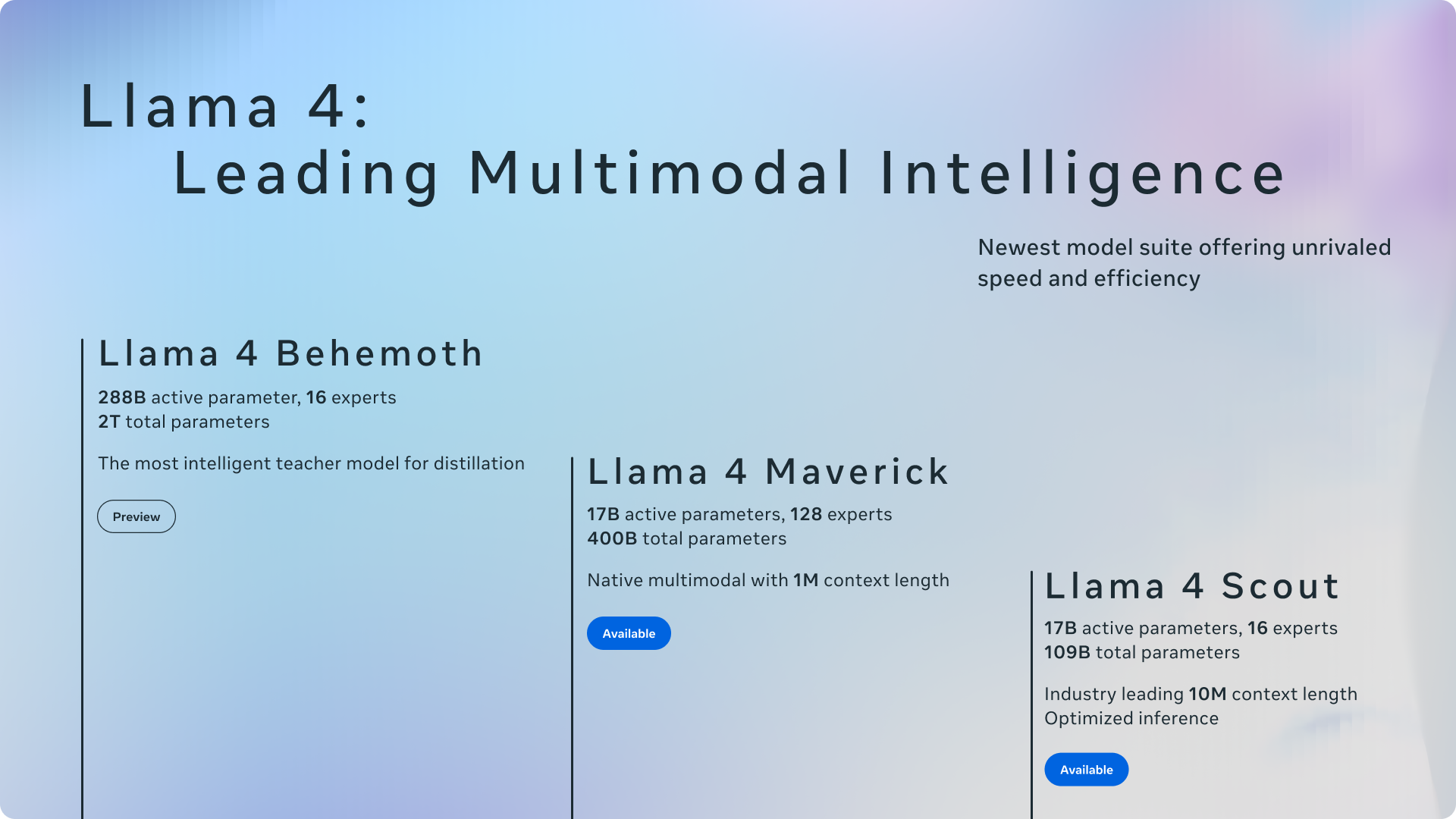

Meta AI has once again pushed the boundaries of open-source large language models with the unveiling of Llama 4. This latest iteration builds upon the successes of its predecessors, introducing a new era of natively multimodal AI innovation.

Llama 4 arrives with a suite of models, with Llama 4 Scout and Llama 4 Maverick firstly launched and 2 more coming, each engineered for leading intelligence and unparalleled efficiency. This series boasts native multimodality, mixture-of-experts architectures, and remarkably long context windows of 10 million tokens, promising significant leaps in performance and broader accessibility for developers and enterprises alike. Quoting Mark Zuckerburg, Llama 4 Scout is “extremely fast, natively multi-modal, and runs on a single GPU”.

In this article, we will cover how to run and interact with Llama-4-Scout-17B-16E-Instruct-GGUF on your own edge device on your own edge device.

We will use the Rust + Wasm stack to develop and deploy applications for this model. There are no complex Python packages or C++ toolchains to install! See why we choose this tech stack.

Run Llama-4-Scout-17B-16E-Instruct-GGUF

Step 1: Install WasmEdge via the following command line.

curl -sSf https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install_v2.sh | bash -s -- -v 0.14.1

Step 2: Download the Quantized Llama 4 Model

The model is 39.6 GB in size and it will take time to download the model . If you want to run a different model, you will need to change the model download link below.

curl -LO https://huggingface.co/second-state/Llama-4-Scout-17B-16E-Instruct-GGUF/resolve/main/Llama-4-Scout-17B-16E-Instruct-Q2_K.gguf

Step 3: Download the LlamaEdge API server

It is a cross-platform portable Wasm app that can run on many CPU and GPU devices.

curl -LO https://github.com/LlamaEdge/LlamaEdge/releases/latest/download/llama-api-server.wasm

Step 4: Download the Chatbot UI to interact with the Llama-4 model in the browser.

curl -LO https://github.com/LlamaEdge/chatbot-ui/releases/latest/download/chatbot-ui.tar.gz

tar xzf chatbot-ui.tar.gz

rm chatbot-ui.tar.gz

Next, use the following command lines to start a LlamaEdge API server for the model. LlamaEdge provides an OpenAI compatible API, and you can connect any chatbot client or agent to it! Copy

wasmedge --dir .:. --nn-preload default:GGML:AUTO:Llama-4-Scout-17B-16E-Instruct-Q5_K_M.gguf \

llama-api-server.wasm \

--prompt-template llama-4-chat \

--ctx-size 4096 \

--model-name Llama-4-Scout

Chat

Visit http://localhost:8080 in your browser to interact with Llama-4!

As you can see, the system prompt works. The model responds to our request as a Rust developer.

Use the API

The LlamaEdge API server is fully compatible with OpenAI API specs. You can send an API request to the model.

curl -X POST http://localhost:8080/v1/chat/completions \

-H 'accept:application/json' \

-H 'Content-Type: application/json' \

-d '{"messages":[{"role":"system", "content": "You are a helpful assistant. Answer as concise as possible"}, {"role":"user", "content": "Can a person be at the North Pole and the South Pole at the same time??"}], "model": "Gemma-3-1b"}'

{"id":"chatcmpl-809db913-3efb-47e1-99eb-779917e5545f","object":"chat.completion","created":1742307745,"model":"Llama-4-Scout","choices":[{"index":0,"message":{"content":"No, a person cannot be at both the North Pole and the South Pole simultaneously. They are located on opposite hemispheres of the Earth.","role":"assistant"},"finish_reason":"stop","logprobs":null}],"usage":{"prompt_tokens":38,"completion_tokens":29,"total_tokens":67}}%

RAG and Embeddings

Finally, if you are using this model to create agentic or RAG applications, you will likely need an API to compute vector embeddings for the user request text. That can be done by adding an embedding model to the LlamaEdge API server. Learn how this is done.

Gaia

Alternatively, the Gaia network software allows you to stand up the Mistral LLM, embedding model, and a vector knowledge base in a single command. Try it with Llama-4!

Join the WasmEdge discord to share insights. Any questions about getting this model running? Please go to second-state/LlamaEdge to raise an issue or book a demo with us to enjoy your own LLMs across devices!