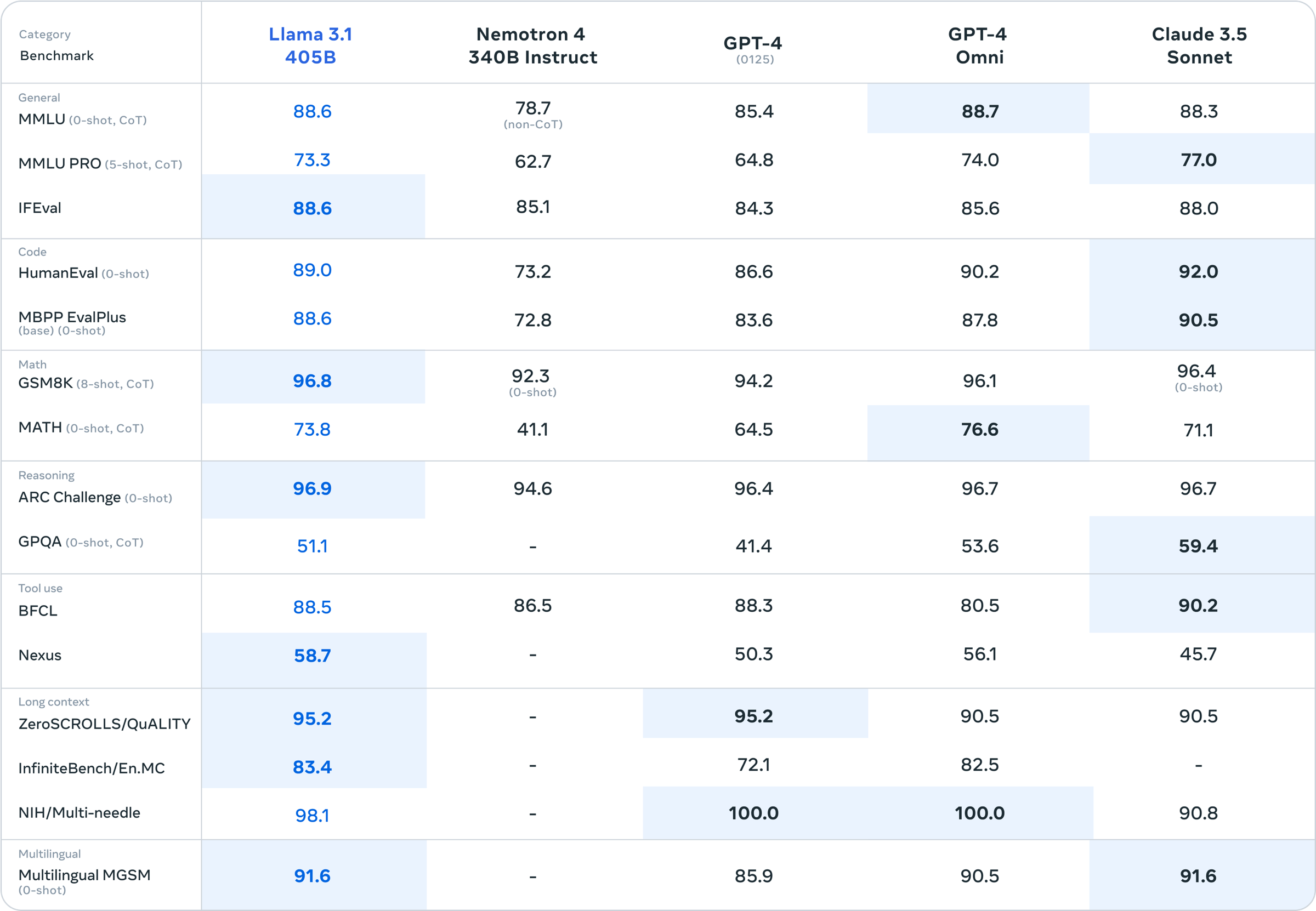

The newly released Llama 3.1 LLMs are Meta’s “most capable models to date”. The largest of them, the 405B model, is the first open source LLM to match or exceed the performance of state-of-the-art (SOTA) closed-source models such as GPT-4o and Claude 3.5 Sonnet.

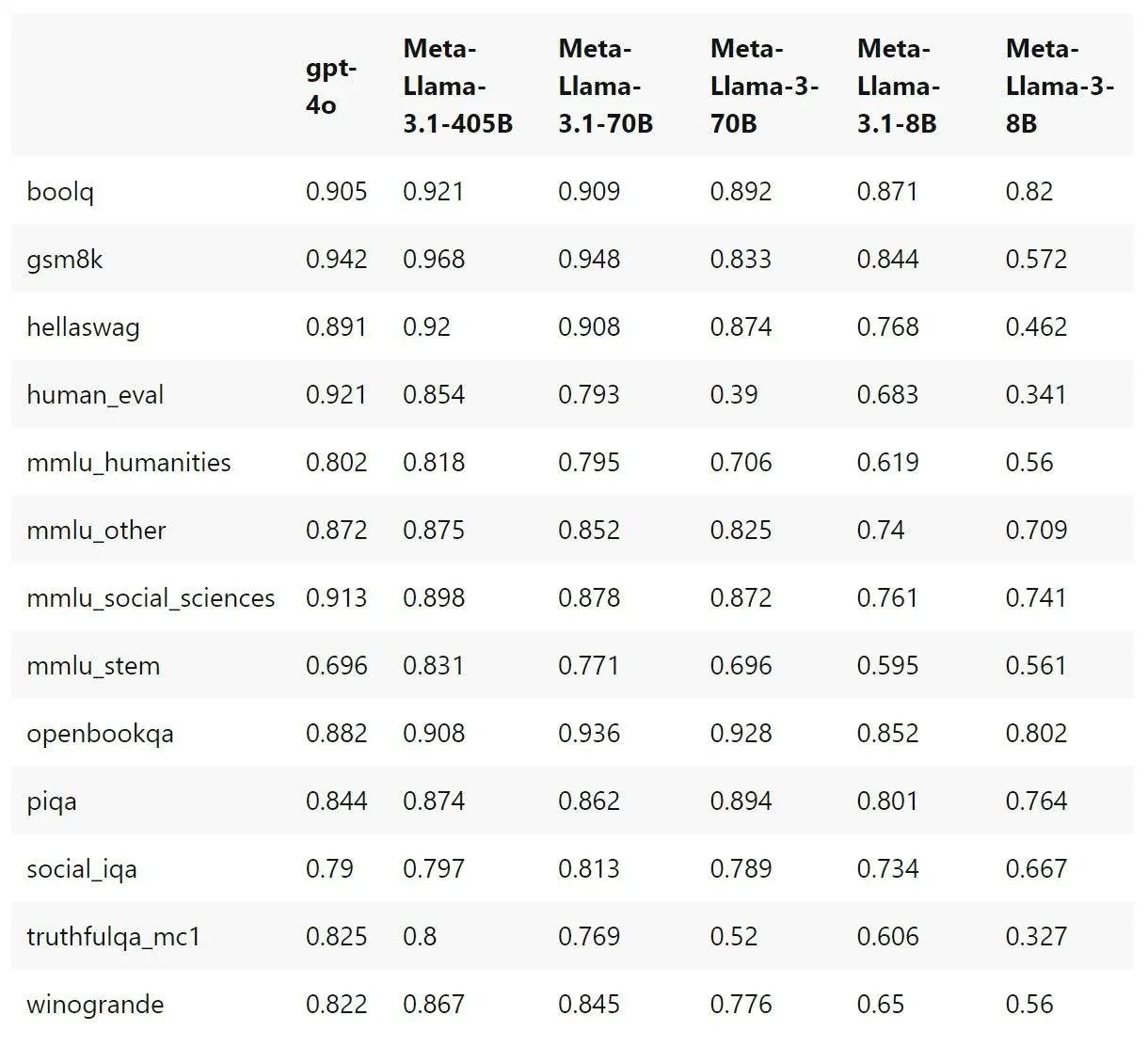

While the 405B model is probably too big for personal computers, Meta has used it to further train and fine-tune the smaller Llama 3 models. The results are spectacular! Compared with Llama 3 8B, the Llama 3.1 8B model not only scores significantly higher across the board on benchmarks, but also supports a much longer context length (128k vs. 8k tokens). For LlamaEdge users who are running Llama 3 models, especially in RAG and agent applications, these are compelling reasons to upgrade!

In this article, we will cover:

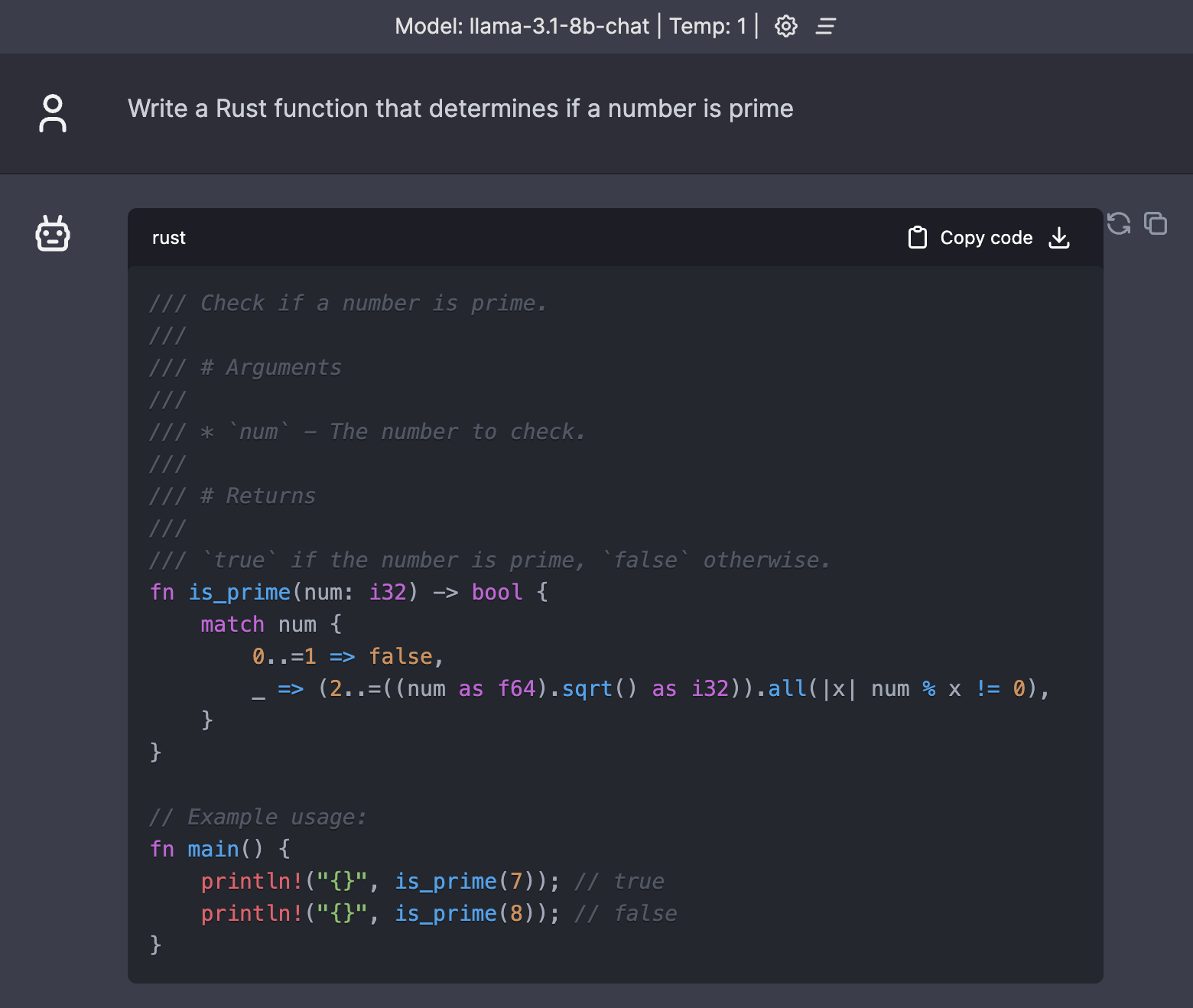

- How to run the Llama 3.1 8B model locally as a chatbot

- How to use it as a drop-in replacement for OpenAI in your apps or agents

We will use LlamaEdge (the Rust + Wasm stack) to develop and deploy applications for this model. There are no complex Python packages or C++ toolchains to install! See why we chose this tech stack.

Run Llama-3.1-8B locally

Step 1: Install WasmEdge with the following command.

```bash
curl -sSf https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install_v2.sh | bash -s -- -v 0.13.5
```

Step 2: Download the Meta-Llama-3.1-8B-Instruct GGUF file. The model is 5.73 GB, so it could take a while to download.

```bash
curl -LO https://huggingface.co/second-state/Meta-Llama-3.1-8B-Instruct-GGUF/resolve/main/Meta-Llama-3.1-8B-Instruct-Q5_K_M.gguf
```

Step 3: Download the LlamaEdge API server app. It is a cross-platform, portable Wasm app that can run on many CPU and GPU devices.

```bash
curl -LO https://github.com/LlamaEdge/LlamaEdge/releases/latest/download/llama-api-server.wasm
```

Step 4: Download the chatbot UI for interacting with the Llama-3.1-8B model in the browser.

```bash
curl -LO https://github.com/LlamaEdge/chatbot-ui/releases/latest/download/chatbot-ui.tar.gz
tar xzf chatbot-ui.tar.gz
rm chatbot-ui.tar.gz
```
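Before starting the server, it can help to confirm that everything from Steps 1 to 4 is in place. The function below is a hypothetical helper, not part of LlamaEdge: it checks for the two downloaded files, assumes the chatbot UI tarball extracts into a `chatbot-ui` directory, and checks that `wasmedge` is on your PATH.

```bash
#!/usr/bin/env bash
# Hypothetical preflight helper (not part of LlamaEdge). Run it in the
# directory where you downloaded the files in Steps 2 to 4.
check_llamaedge_files() {
  local missing=0
  # Model weights from Step 2 and the API server app from Step 3.
  for f in Meta-Llama-3.1-8B-Instruct-Q5_K_M.gguf llama-api-server.wasm; do
    if [ ! -f "$f" ]; then
      echo "missing file: $f"
      missing=1
    fi
  done
  # Assumption: the Step 4 tarball extracts into a chatbot-ui directory.
  if [ ! -d chatbot-ui ]; then
    echo "missing directory: chatbot-ui"
    missing=1
  fi
  # The wasmedge runtime installed in Step 1 must be callable.
  if ! command -v wasmedge >/dev/null 2>&1; then
    echo "wasmedge not found on PATH"
    missing=1
  fi
  # Exit status 0 means everything was found.
  return "$missing"
}
```

Usage: `check_llamaedge_files && echo "Ready to start the API server"`.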

Next, use the following command to start a LlamaEdge API server for the model.

```bash
wasmedge --dir .:. --nn-preload default:GGML:AUTO:Meta-Llama-3.1-8B-Instruct-Q5_K_M.gguf \
  llama-api-server.wasm \
  --prompt-template llama-3-chat \
  --ctx-size 32768 \
  --batch-size 128 \
  --model-name llama-3.1-8b-chat
```

We are using a 32k (32768) context size here instead of the full 128k because of the RAM constraints of typical personal computers. If your computer has less than 16 GB of RAM, you might need to lower it even further.
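To see why the context size drives RAM usage, here is a rough back-of-the-envelope estimate of the key/value (KV) cache the model needs. It is a sketch under stated assumptions, not LlamaEdge's own accounting: it assumes Llama 3.1 8B has 32 layers, 8 key/value heads of dimension 128, and a 16-bit cache, and it ignores compute buffers.

```bash
#!/usr/bin/env bash
# Rough KV-cache estimate for Llama 3.1 8B.
# Assumptions: 32 layers, 8 key/value heads (grouped-query attention),
# head dimension 128, 2 bytes per cached value. Compute buffers are ignored.
LAYERS=32
KV_HEADS=8
HEAD_DIM=128
BYTES_PER_VALUE=2

# Bytes per token: one key and one value vector per KV head in every layer.
kv_bytes_per_token() {
  echo $(( 2 * LAYERS * KV_HEADS * HEAD_DIM * BYTES_PER_VALUE ))
}

# KV cache size in MiB for a given context size (in tokens).
kv_cache_mib() {
  local ctx_size="$1"
  echo $(( ctx_size * $(kv_bytes_per_token) / 1024 / 1024 ))
}

for ctx in 8192 32768 131072; do
  echo "ctx-size $ctx -> ~$(kv_cache_mib "$ctx") MiB of KV cache"
done
```

By this estimate each token costs 128 KiB, so a 32k context adds about 4 GiB on top of the 5.73 GB model file (roughly 10 GB in total), while the full 128k context adds about 16 GiB (over 20 GB in total).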

Then, open your browser to http://localhost:8080 to start the chat!
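You can also talk to the same server from the command line. The sketch below assumes the server is listening on port 8080 (the address used by the chatbot UI) and serves the OpenAI-style `/v1/chat/completions` route. `chat_payload` and `ask_llama` are hypothetical helper names chosen for this example.

```bash
#!/usr/bin/env bash
# Send a chat message to the local LlamaEdge API server started above.
API_BASE="http://localhost:8080/v1"
MODEL_NAME="llama-3.1-8b-chat"   # must match --model-name in the start command

# Build an OpenAI-style chat request body. The message is inserted as-is,
# so this sketch assumes it contains no double quotes or backslashes.
chat_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' \
    "$MODEL_NAME" "$1"
}

# POST the request and print the raw JSON response.
ask_llama() {
  curl -s -X POST "$API_BASE/chat/completions" \
    -H 'Content-Type: application/json' \
    -d "$(chat_payload "$1")"
}
```

Usage: `ask_llama "What is the capital of France?"`. The reply follows the OpenAI response format, with the answer text in `choices[0].message.content`.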

A drop-in replacement for OpenAI

LlamaEdge is lightweight and does not require a daemon or sudo process to run. It can be easily embedded into your own apps! With support for both chat and embedding models, LlamaEdge could become an OpenAI API replacement right inside your app on the local computer!

Next, we will show you how to start a full API server for the Llama-3.1-8B model along with an embedding model. The API server will provide both chat completions and embeddings endpoints. In addition to the steps in the previous section, we will also need to:

Step 5: Download an embedding model.

```bash
curl -LO https://huggingface.co/second-state/Nomic-embed-text-v1.5-Embedding-GGUF/resolve/main/nomic-embed-text-v1.5.f16.gguf
```

Then, use the following command to start the LlamaEdge API server with both the chat and embedding models. For a more detailed explanation, check out the doc Start a LlamaEdge API service.

```bash
wasmedge --dir .:. \
  --nn-preload default:GGML:AUTO:Meta-Llama-3.1-8B-Instruct-Q5_K_M.gguf \
  --nn-preload embedding:GGML:AUTO:nomic-embed-text-v1.5.f16.gguf \
  llama-api-server.wasm \
  --model-alias default,embedding \
  --model-name llama-3.1-8b-chat,nomic-embed \
  --prompt-template llama-3-chat,embedding \
  --batch-size 128,8192 \
  --ctx-size 32768,8192
```
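In the command above, each comma-separated flag carries one value per model, matched by position: the first value goes to the model preloaded under the `default` alias, the second to the one under `embedding`. The illustration below is not part of LlamaEdge; it simply splits the values copied from the command and prints the resulting pairing.

```bash
#!/usr/bin/env bash
# Illustration only: pair up the comma-separated flag values by position.
show_model_settings() {
  local -a aliases names templates batches ctxs
  IFS=',' read -r -a aliases   <<< "default,embedding"
  IFS=',' read -r -a names     <<< "llama-3.1-8b-chat,nomic-embed"
  IFS=',' read -r -a templates <<< "llama-3-chat,embedding"
  IFS=',' read -r -a batches   <<< "128,8192"
  IFS=',' read -r -a ctxs      <<< "32768,8192"
  local i
  # One output line per model, in the order the aliases are listed.
  for i in "${!aliases[@]}"; do
    echo "${aliases[$i]}: name=${names[$i]} template=${templates[$i]} batch=${batches[$i]} ctx=${ctxs[$i]}"
  done
}

show_model_settings
```

This prints `default: name=llama-3.1-8b-chat template=llama-3-chat batch=128 ctx=32768` followed by `embedding: name=nomic-embed template=embedding batch=8192 ctx=8192`, which shows that the chat model keeps its 32k context while the embedding model uses 8192 for both its batch and context sizes.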

Finally, you can follow these tutorials to integrate the LlamaEdge API server with other agent frameworks as a drop-in replacement for OpenAI. Specifically, use the following values in your app or agent configuration in place of the OpenAI API settings.

| Config option | Value |
|---|---|
| Base API URL | http://localhost:8080/v1 |
| Model name (for LLM) | llama-3.1-8b-chat |
| Model name (for text embedding) | nomic-embed |
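As a minimal sketch of these settings in use, the snippet below exports them in the form the official OpenAI SDKs read from the environment (`OPENAI_BASE_URL` and `OPENAI_API_KEY`; other frameworks may use their own option names) and sends an embeddings request with curl. The API key is a dummy placeholder, assuming the local server has no key configured; the SDKs refuse to start without one. `embedding_payload` and `embed_text` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Point OpenAI-compatible clients at the local LlamaEdge server.
export OPENAI_BASE_URL="http://localhost:8080/v1"
export OPENAI_API_KEY="not-needed"   # dummy value; assumes no key is set on the server
EMBEDDING_MODEL="nomic-embed"        # matches the second --model-name value

# Build an OpenAI-style embeddings request body for a single string.
# Assumes the text contains no double quotes or backslashes.
embedding_payload() {
  printf '{"model":"%s","input":["%s"]}' "$EMBEDDING_MODEL" "$1"
}

# POST the request to the embeddings endpoint and print the raw JSON response.
embed_text() {
  curl -s -X POST "$OPENAI_BASE_URL/embeddings" \
    -H 'Content-Type: application/json' \
    -d "$(embedding_payload "$1")"
}
```

Usage: `embed_text "Paris is the capital of France."`. The vector is returned in `data[0].embedding`, following the OpenAI response format.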

Conclusion

Meta continues to lead innovation in open source AI. With Llama 3.1, it has caught up with the best closed-source models. The Llama 3.1 models also show significant improvements in both quality and context length over the Llama 3 models. Best of all? All the Llama 3.1 models are supported out of the box by LlamaEdge applications. Upgrade to Llama 3.1 today!

Learn more from the LlamaEdge docs, and join the WasmEdge Discord to ask questions and share insights.