In the previous article, we discussed how WebAssembly could tie together native TensorFlow, Node.js JavaScript, and Rust functions to create high-performance, safe web services for AI.

In this article, we will discuss how to apply this approach to ImageNet's MobileNet image classification model, and more importantly, how to create web applications for your own retrained MobileNet models.

NOTE

This article demonstrates how to call operating system native programs from the SSVM. If you are primarily interested in running TensorFlow models from Rust programs in SSVM, you should check out the SSVM TensorFlow WASI tutorials. That approach has a much more ergonomic API and is faster.

Prerequisites

You will need to install Rust, Node.js, the Second State WebAssembly VM, and the ssvmup tool. Check out the instructions for the steps, or simply use our Docker image. You will also need the TensorFlow library on your machine.

    $ wget https://storage.googleapis.com/tensorflow/libtensorflow/libtensorflow-gpu-linux-x86_64-1.15.0.tar.gz
    $ sudo tar -C /usr/ -xzf libtensorflow-gpu-linux-x86_64-1.15.0.tar.gz

Getting started

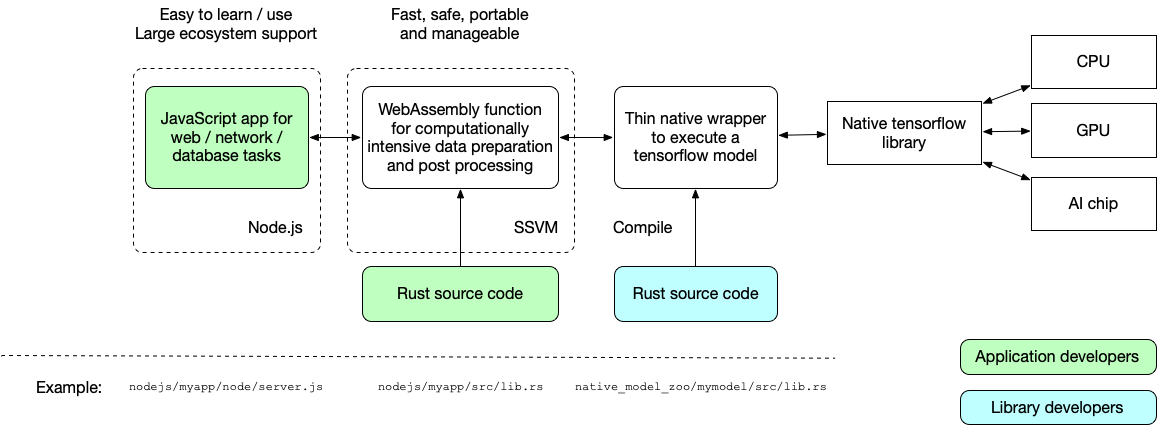

Our application has three parts.

- a Node.js application that handles the web UI;

- a Rust function compiled into WebAssembly to perform computational tasks such as data preparation and post-processing; and

- a thin native wrapper, also written in Rust, around the native TensorFlow library to execute the model.

To get started with the demo, we start from the native TensorFlow wrapper. Go to this directory and do the following to build and install the wrapper.

    $ cargo install --path .

Next, let's build the WebAssembly function that does most of the data processing computation. It calls the aforementioned native wrapper to perform TensorFlow operations. Go to this directory and do the following.

    $ ssvmup build

Finally, you can start the Node.js application.

    $ cd node
    $ node server.js

Go to http://localhost:8080/, submit an image, and the web service will try to recognize it!

Code walk-through

To see how the application works, let's start with the Node.js JavaScript layer. The Node.js application handles the file uploading and the response.

    app.post('/infer', function (req, res) {
      let image_file = req.files.image_file;
      // Call the infer() function in WebAssembly
      var result = JSON.parse( infer(image_file.data) );
      res.send('Detected <b>' + result[0] + '</b> with <u>' + result[1] + '</u> confidence.');
    });

As you can see, it simply passes the image data to the infer() function and then returns the response. The infer() function is written in Rust and compiled into WebAssembly so that it can be called from JavaScript.

The infer() function resizes the input image to 224x224, the fixed input size the MobileNet model requires, and flattens it into an array of RGB bytes. It then invokes the TensorFlow model with the flattened image data as input. The model returns an array of 1000 numbers. Each number corresponds to a label category in the imagenet_slim_labels.txt file, such as “ostrich” or “picket fence”, and is the probability that the image contains the labeled object. The function selects the label with the highest probability and returns the label text together with a confidence level.

    #[wasm_bindgen]
    pub fn infer(image_data: &[u8]) -> String {
        let img = image::load_from_memory(image_data).unwrap().to_rgb();
        let resized = image::imageops::resize(&img, 224, 224, ::image::imageops::FilterType::Triangle);

        // Call the native wrapper program to run the tensorflow model
        let mut cmd = Command::new("image_classification_mobilenet_v2_14_224");
        // The input image data is passed to the native program via STDIN
        for rgb in resized.pixels() {
            cmd.stdin_u8(rgb[0] as u8)
                .stdin_u8(rgb[1] as u8)
                .stdin_u8(rgb[2] as u8);
        }
        let out = cmd.output();

        // The return value from the native wrapper is a JSON string via STDOUT
        let stdout_json: Value = from_str(
            str::from_utf8(&out.stdout).expect("native wrapper output is not valid UTF-8"),
        ).unwrap();
        let stdout_vec = stdout_json.as_array().unwrap();

        // Find the index of the highest probability
        let mut i = 0;
        let mut max_index: i32 = -1;
        let mut max_value: f64 = -1.0;
        while i < stdout_vec.len() {
            let cur = stdout_vec[i].as_f64().unwrap();
            if cur > max_value {
                max_value = cur;
                max_index = i as i32;
            }
            i += 1;
        }

        // Translate the probability into a confidence level
        let mut confidence = "low";
        if max_value > 0.75 {
            confidence = "very high";
        } else if max_value > 0.5 {
            confidence = "high";
        } else if max_value > 0.2 {
            confidence = "medium";
        }

        // Look up the label text on line max_index of the labels file
        let labels = include_str!("imagenet_slim_labels.txt");
        let mut label_lines = labels.lines();
        for _i in 0..max_index {
            label_lines.next();
        }

        let ret: (String, String) = (label_lines.next().unwrap().to_string(), confidence.to_string());
        return serde_json::to_string(&ret).unwrap();
    }
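The arg-max loop and the confidence thresholds in infer() can also be written as two small helper functions, which makes them easy to test in isolation. The following is only a sketch using the Rust standard library: the names `argmax` and `confidence_label` are ours and do not appear in the demo source, and we assume the probabilities have already been parsed into a slice of `f64`.

```rust
/// Returns the index and value of the largest probability,
/// or `None` when the slice is empty (or contains only NaN values).
fn argmax(probs: &[f64]) -> Option<(usize, f64)> {
    probs
        .iter()
        .copied()
        .enumerate()
        // Ignore NaN so that comparisons below are well defined.
        .filter(|(_, p)| !p.is_nan())
        // Keep the first maximum on ties, like the `>` comparison in the loop.
        .fold(None, |best: Option<(usize, f64)>, (i, p)| match best {
            Some((_, best_p)) if p <= best_p => best,
            _ => Some((i, p)),
        })
}

/// Maps a probability to the same confidence wording used by infer().
fn confidence_label(p: f64) -> &'static str {
    if p > 0.75 {
        "very high"
    } else if p > 0.5 {
        "high"
    } else if p > 0.2 {
        "medium"
    } else {
        "low"
    }
}
```

Returning an `Option` instead of a `-1` sentinel index means an empty result from the native wrapper can be handled explicitly rather than causing a panic at the label lookup.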

The image_classification_mobilenet_v2_14_224 command runs the mobilenet_v2_1.4_224_frozen.pb TensorFlow model in native code. The image data, which is prepared as flattened RGB values, is passed in from the WebAssembly infer() function via STDIN. The result from the model is encoded in JSON and returned via STDOUT.

Notice how the wrapper passes the image data to the model through a tensor named input, and then uses the MobilenetV2/Predictions/Softmax tensor to retrieve the probabilities array.

    fn main() -> Result<(), Box<dyn Error>> {
        let mut buffer: Vec<u8> = Vec::new();
        let mut flattened: Vec<f32> = Vec::new();

        // The input image data is passed in via STDIN
        io::stdin().read_to_end(&mut buffer)?;
        for num in buffer {
            flattened.push(num as f32 / 255.);
        }

        // Create the tensorflow model from a frozen saved model file
        let model = include_bytes!("mobilenet_v2_1.4_224_frozen.pb");
        let mut graph = Graph::new();
        graph.import_graph_def(&*model, &ImportGraphDefOptions::new())?;
        let mut args = SessionRunArgs::new();

        // Add the input image to the input placeholder
        let input = Tensor::new(&[1, 224, 224, 3]).with_values(&flattened)?;
        args.add_feed(&graph.operation_by_name_required("input")?, 0, &input);

        // Request the following outputs after the session runs.
        let prediction = args.request_fetch(&graph.operation_by_name_required("MobilenetV2/Predictions/Softmax")?, 0);

        let session = Session::new(&SessionOptions::new(), &graph)?;
        session.run(&mut args)?;

        // Get the result tensor after the model runs
        let prediction_res: Tensor<f32> = args.fetch(prediction)?;

        // Send results to STDOUT
        let mut i = 0;
        let mut json_vec: Vec<f32> = Vec::new();
        while i < prediction_res.len() {
            json_vec.push(prediction_res[i]);
            i += 1;
        }
        let json_obj = json!(json_vec);
        // Writes to STDOUT
        println!("{}", json_obj.to_string());
        Ok(())
    }
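The wrapper trusts that STDIN carries exactly 224 x 224 x 3 bytes. If it does not, the mismatch only surfaces later as a tensor shape error. A minimal sketch of the normalization step with an explicit length check might look like the following. The function name `normalize_rgb` is hypothetical and is not part of the demo source; it uses only the standard library.

```rust
/// Converts raw 8-bit channel values into floats in [0, 1], after checking
/// that the byte count matches a `width x height` image with 3 channels.
fn normalize_rgb(bytes: &[u8], width: usize, height: usize) -> Result<Vec<f32>, String> {
    let expected = width * height * 3;
    if bytes.len() != expected {
        return Err(format!(
            "expected {} bytes for a {}x{} RGB image, got {}",
            expected,
            width,
            height,
            bytes.len()
        ));
    }
    // Same scaling as the wrapper: each byte divided by 255.
    Ok(bytes.iter().map(|&b| f32::from(b) / 255.0).collect())
}
```

In the wrapper, a call such as `normalize_rgb(&buffer, 224, 224)` could replace the byte-by-byte loop that fills `flattened`.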

Our goal is to create native execution wrappers for common AI models so that developers can just use them as libraries.

MobileNet as a Service

So far, we have seen how to use the “standard” MobileNet model to do image classification. But a central feature of MobileNet is that the model can be retrained with your own data, so the service can classify images using labels that are relevant to your application. In this case, we need to change the Rust wrapper program to handle any MobileNet-like model. The example is here. Let's see how it works.

The Node.js application now takes three files.

- A frozen retrained MobileNet model file

- A labels file

- The image to be classified

    app.post('/infer', function (req, res) {
      let model_file = req.files.model_file;
      let label_file = req.files.label_file;
      let image_file = req.files.image_file;
      // The Node.js app calls the infer() method in WebAssembly
      var result = JSON.parse( infer(model_file.data, label_file.data, image_file.data) );
      res.send('Detected <b>' + result[0] + '</b> with <u>' + result[1] + '</u> confidence.');
    });

The infer() function is a Rust function compiled to WebAssembly. It takes those three files and calls the native mobilenet_v2 command. The command takes the following parameters.

- The length of the model file

- The tensor name for the input parameter for the input image data

- The tensor name for the output parameter for the probabilities for each label

- Image width

- Image height

The model data and the input image data are both passed to the command via STDIN, with the model bytes first. The command outputs a JSON array of the probabilities for each label via STDOUT.
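Because the five parameters arrive as plain strings, it helps to validate them in one place before touching STDIN. The sketch below shows one way this could be done with the standard library only. The `WrapperArgs` struct and the `parse_args` function are our own illustrative names and are not part of the demo source, which parses the arguments inline.

```rust
/// The five command line parameters, in the order listed above.
#[derive(Debug, PartialEq)]
struct WrapperArgs {
    model_size: u64,
    input_tensor: String,
    output_tensor: String,
    img_width: u64,
    img_height: u64,
}

/// Parses the full argument vector, including the program name at index 0.
fn parse_args(args: &[String]) -> Result<WrapperArgs, String> {
    // Five parameters plus the program name means six entries.
    if args.len() != 6 {
        return Err(format!(
            "expected 5 arguments, got {}",
            args.len().saturating_sub(1)
        ));
    }
    // Helper that parses one numeric argument and names it in the error.
    let num = |idx: usize, name: &str| -> Result<u64, String> {
        args[idx]
            .parse::<u64>()
            .map_err(|e| format!("invalid {} '{}': {}", name, args[idx], e))
    };
    Ok(WrapperArgs {
        model_size: num(1, "model size")?,
        input_tensor: args[2].clone(),
        output_tensor: args[3].clone(),
        img_width: num(4, "image width")?,
        img_height: num(5, "image height")?,
    })
}
```

A wrapper could call this as `parse_args(&std::env::args().collect::<Vec<String>>())` and report the error string on STDERR instead of panicking on a bad argument.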

    #[wasm_bindgen]
    pub fn infer(model_data: &[u8], label_data: &[u8], image_data: &[u8]) -> String {
        let img = image::load_from_memory(image_data).unwrap().to_rgb();
        let resized = image::imageops::resize(&img, 224, 224, ::image::imageops::FilterType::Triangle);

        // Call the native wrapper program to run the tensorflow model
        let mut cmd = Command::new("mobilenet_v2");
        // Pass some arguments to the model such as the names of the input and output tensors
        cmd.arg(model_data.len().to_string())
            .arg("input")
            .arg("MobilenetV2/Predictions/Softmax")
            .arg("224")
            .arg("224");

        // The model itself is passed via STDIN to the native wrapper program
        for m in model_data {
            cmd.stdin_u8(*m);
        }
        // The input image data to the model is also passed via STDIN
        for rgb in resized.pixels() {
            cmd.stdin_u8(rgb[0] as u8)
                .stdin_u8(rgb[1] as u8)
                .stdin_u8(rgb[2] as u8);
        }
        let out = cmd.output();

        // ... find the highest probability in the output array ...
        // ... look up the label for the highest probability ...
    }
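The label lookup is elided in the listing above. Since the labels now arrive at runtime as `label_data` rather than being compiled in, one plausible way to do the lookup is sketched below. This is an assumption on our part, not the demo's actual code: the helper name `label_at` is hypothetical, and we assume the labels file is UTF-8 text with one label per line, in the same order as the model's output array.

```rust
/// Returns the label on line `index` (0-based) of an uploaded labels file,
/// or `None` if the data is not valid UTF-8 or has too few lines.
fn label_at(label_data: &[u8], index: usize) -> Option<String> {
    let text = std::str::from_utf8(label_data).ok()?;
    text.lines().nth(index).map(|line| line.trim().to_string())
}
```

Returning `None` lets the caller report a mismatched model and labels file to the user instead of panicking inside the WebAssembly function.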

The native mobilenet_v2 command takes the input parameters from the command line, and the model and image data from STDIN. It loads the model, sets the named input tensor to the image data, performs the TensorFlow model operations, and returns the results from the named output tensor.

    fn main() -> Result<(), Box<dyn Error>> {
        // Get the arguments passed into this native wrapper program
        let args: Vec<String> = env::args().collect();
        let model_size: u64 = args[1].parse::<u64>().unwrap();
        let model_input: &str = &args[2];
        let model_output: &str = &args[3];
        let img_width: u64 = args[4].parse::<u64>().unwrap();
        let img_height: u64 = args[5].parse::<u64>().unwrap();

        let mut buffer: Vec<u8> = Vec::new();
        let mut model: Vec<u8> = Vec::new();
        let mut flattened: Vec<f32> = Vec::new();

        // Read everything from STDIN: the first model_size bytes are the model,
        // and the remaining bytes are the image data
        let mut i: u64 = 0;
        io::stdin().read_to_end(&mut buffer)?;
        for num in buffer {
            if i < model_size {
                model.push(num);
            } else {
                flattened.push(num as f32 / 255.);
            }
            i = i + 1;
        }

        // Create a tensorflow model from the model data
        let mut graph = Graph::new();
        graph.import_graph_def(&*model, &ImportGraphDefOptions::new())?;
        let mut args = SessionRunArgs::new();

        // The input tensor holds the RGB pixel data in [batch, height, width, channels] order
        let input = Tensor::new(&[1, img_height, img_width, 3]).with_values(&flattened)?;
        args.add_feed(&graph.operation_by_name_required(model_input)?, 0, &input);

        // Request the following outputs after the session runs.
        let prediction = args.request_fetch(&graph.operation_by_name_required(model_output)?, 0);

        let session = Session::new(&SessionOptions::new(), &graph)?;
        session.run(&mut args)?;

        // Get the results from the named tensor after the model runs
        let prediction_res: Tensor<f32> = args.fetch(prediction)?;

        // Return the results from the native wrapper app to the calling WebAssembly function
        let mut i = 0;
        let mut json_vec: Vec<f32> = Vec::new();
        while i < prediction_res.len() {
            json_vec.push(prediction_res[i]);
            i += 1;
        }
        let json_obj = json!(json_vec);
        // Write data into STDOUT
        println!("{}", json_obj.to_string());
        Ok(())
    }
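The wrapper separates the model bytes from the image bytes by counting through the STDIN buffer one byte at a time. The same split can be done in a single step with the standard library's `split_at`, with a guard against a model length larger than the data received. The following is a sketch only, and `split_payload` is a hypothetical helper name that does not appear in the demo source.

```rust
/// Splits the STDIN payload into (model bytes, image bytes).
/// The first `model_size` bytes are the model; the rest is the image.
fn split_payload(buffer: &[u8], model_size: usize) -> Result<(&[u8], &[u8]), String> {
    // `split_at` panics if the index is out of bounds, so check first.
    if model_size > buffer.len() {
        return Err(format!(
            "model size {} exceeds the {} bytes received",
            model_size,
            buffer.len()
        ));
    }
    Ok(buffer.split_at(model_size))
}
```

Because both halves are slices borrowed from the original buffer, this avoids copying the model, which for a frozen MobileNet graph can be several megabytes.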

What's next

WebAssembly is fast, safe, and portable. It integrates seamlessly into the JavaScript and Node.js ecosystem. In this tutorial, we demonstrated how to create a high-performance AI-as-a-Service that can run image classification on any MobileNet model. What are you waiting for? Contribute your own wrapper to our model zoo!